Background

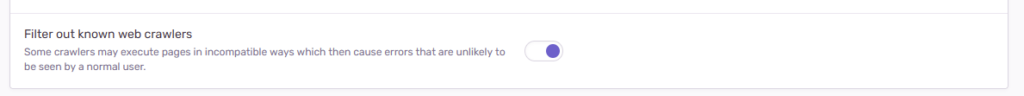

When you use Sentry’s Inbound Filtering feature, there is an option to filter out “known web crawlers”.

The criteria that cause an event to be categorized this way are not documented, so this post tracks them down.

Research

At the time of writing, Sentry’s main source code is hosted on GitHub in a repo called “getsentry/sentry”. It should be as easy as typing “crawlers” into the GitHub search box, right?

Not really.

When I did that, I only found where the kinds of inbound filters are declared, in src/sentry/ingest/inbound_filters.py:

    class FilterStatKeys:
        ...
        WEB_CRAWLER = "web-crawlers"

    FILTER_STAT_KEYS_TO_VALUES = {
        FilterStatKeys.WEB_CRAWLER: tsdb.models.project_total_received_web_crawlers,
        ...
    }

    _web_crawlers_filter = _FilterSpec(
        id=FilterStatKeys.WEB_CRAWLER,
        ...
    )

However, GitHub’s code search can do more: it is possible to expand the search to the whole organization. When I did that, the first result was the jackpot: relay-filter/src/web_crawlers.rs in the “getsentry/relay” repo. That repo looks like the service that does event processing (and filtering).

Results

So, from the list in relay-filter/src/web_crawlers.rs, an event is categorized as “web crawlers” if it has a user agent and that user agent matches any of the following rules (at the time of writing, 10 Oct 2022):

- Mediapartners-Google

- AdsBot-Google

- Googlebot

- FeedFetcher-Google

- BingBot

- BingPreview

- Baiduspider

- Slurp

- Sogou

- ia_archiver

- anything that ends with “bot” or “bots”

- anything that ends with “spider”

- “Slack” but not “Slackbot”

- Calypso AppCrawler

- pingdom

- lyticsbot

- AWS Security Scanner

- HubSpot Crawler
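To make the list concrete, here is a minimal Python sketch of how such a check could look. It is not Relay's actual implementation (which is written in Rust); it only encodes the list above as I read it. The names `is_web_crawler`, `CRAWLER_RE` and `ALLOWED_RE` are made up for illustration, and three things are assumptions on my part: matching is case-insensitive, "ends with" means a word inside the user agent ends with "bot", "bots" or "spider", and the Slackbot exception wins over every other rule (including the generic "bot" one).

```python
import re

# Literal names copied from the list above.
_LITERALS = [
    "Mediapartners-Google", "AdsBot-Google", "Googlebot", "FeedFetcher-Google",
    "BingBot", "BingPreview", "Baiduspider", "Slurp", "Sogou", "ia_archiver",
    "Slack", "Calypso AppCrawler", "pingdom", "lyticsbot",
    "AWS Security Scanner", "HubSpot Crawler",
]

# Assumption: "ends with" means a word in the user agent ends with
# "bot", "bots" or "spider".
_GENERIC = r"bots?\b|spider\b"

# Assumption: matching is case-insensitive.
CRAWLER_RE = re.compile(
    "|".join(re.escape(name) for name in _LITERALS) + "|" + _GENERIC,
    re.IGNORECASE,
)

# Assumption: the Slackbot exception takes precedence over all other rules.
ALLOWED_RE = re.compile(r"Slackbot", re.IGNORECASE)


def is_web_crawler(user_agent):
    """Return True if the user agent matches the crawler list above."""
    if not user_agent:
        # Events without a user agent are never categorized as crawlers.
        return False
    if ALLOWED_RE.search(user_agent):
        return False
    return CRAWLER_RE.search(user_agent) is not None
```

With this sketch, a Googlebot user agent and "Slack-ImgProxy" would be flagged, while "Slackbot 1.0" and an ordinary Chrome user agent would not.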

Conclusion

Thanks to Sentry being open source and GitHub being able to search code across a whole organization, I found the user agent rules that cause an event to be categorized as “web crawlers”. The list is documented in the previous section. Hope it helps :)